Metadata: The Force-Multiplier for Geospatial Intelligence

Why agencies that bet on gold-standard metadata frameworks pull ahead of the pack.

A Flood of Pixels, a Scarcity of Context

Geospatial data has become a strategic asset across sectors in 2025. From military operations to smart city planning, organizations are awash in imagery, sensor feeds, maps, and location data. At the GEOINT 2024 symposium, experts underscored that geospatial information is “integral to national security, urban planning, disaster response and many other areas that require precise, scalable and rapid insights.”

The ability to integrate massive volumes of spatial data from diverse sources can translate into quicker crisis responses and better strategic decisions.

However, the surge of data also brings challenges: without proper structure and metadata, valuable data can languish unused in silos or “data swamps.”

Business and technical leaders are recognizing that simply accumulating geospatial data isn’t enough – how that data is organized and described will determine whether it yields actionable intelligence or overwhelms analysts.

- Ranvijay Pratap Singh, VP - Sales, Skymap Global India Pvt Ltd.

*Skymap Global India Pvt Ltd. is the exclusive distributor of Aetosky in India.

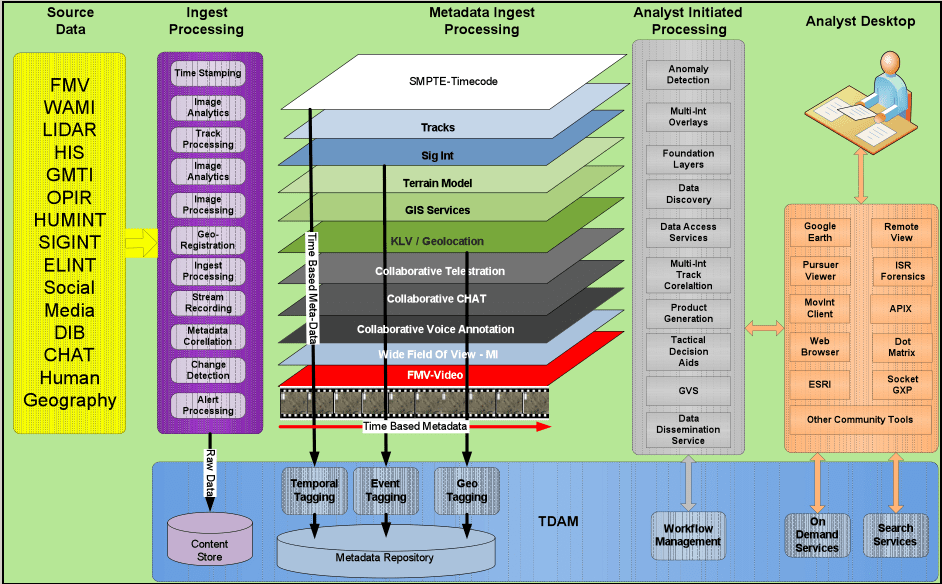

Metadata: The Key to Defense Intelligence

In both defense and urban contexts, metadata – the data about the data – is proving to be the linchpin for making geospatial information useful. The U.S. Department of Defense emphasizes that “metadata is a key component to successfully provide meaningful context and quality to data… necessary for search and discovery of data.”1

In military intelligence, every image, intercept, or report comes with critical tags (location, time, sensor type, classification, etc.) that enable analysts and AI systems to discover, correlate, and fuse information rapidly. When applied correctly, this metadata “aids mission success,” allowing decisions to be made “more rapidly than adversaries are able to adapt.”

For example, a defense analyst can quickly find the latest satellite image of a specific coordinate or filter drone footage by date and sensor, provided those assets are richly described with standardized metadata. Without consistent metadata, that same task becomes like finding a needle in a haystack – a dangerous delay in a fast-moving mission.
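To make the analyst's lookup concrete, here is a minimal sketch of finding the latest asset that covers a coordinate, optionally filtered by sensor. The record fields (`asset_id`, `sensor`, `acquired`, `bbox`), the function names, and the sample data are illustrative assumptions, not a schema from any particular system.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AssetRecord:
    """Hypothetical metadata record for one geospatial asset."""
    asset_id: str
    sensor: str
    acquired: datetime
    bbox: tuple  # (min_lon, min_lat, max_lon, max_lat)


def covers(record: AssetRecord, lon: float, lat: float) -> bool:
    """True if the record's bounding box contains the point."""
    min_lon, min_lat, max_lon, max_lat = record.bbox
    return min_lon <= lon <= max_lon and min_lat <= lat <= max_lat


def latest_covering(records, lon, lat, sensor: Optional[str] = None):
    """Return the most recently acquired record covering (lon, lat), or None."""
    candidates = [
        r for r in records
        if covers(r, lon, lat) and (sensor is None or r.sensor == sensor)
    ]
    return max(candidates, key=lambda r: r.acquired, default=None)


def _utc(day: int) -> datetime:
    return datetime(2025, 1, day, 6, 0, tzinfo=timezone.utc)


# Made-up sample records, for illustration only.
RECORDS = [
    AssetRecord("sat-001", "optical", _utc(10), (77.0, 28.4, 77.4, 28.8)),
    AssetRecord("sat-002", "optical", _utc(12), (77.0, 28.4, 77.4, 28.8)),
    AssetRecord("uav-001", "sar", _utc(14), (77.1, 28.5, 77.3, 28.7)),
    AssetRecord("sat-003", "optical", _utc(15), (72.7, 18.8, 73.0, 19.3)),
]

newest = latest_covering(RECORDS, lon=77.2, lat=28.6)
newest_optical = latest_covering(RECORDS, lon=77.2, lat=28.6, sensor="optical")
```

The lookup is only this short because every record carries the same fields with the same meaning; with inconsistent tags, each source would need its own parsing logic before any filtering could start.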

Metadata: The Key to Urban Intelligence

Metadata’s impact is equally profound in urban planning and smart city management. City agencies deal with layers of geospatial data – zoning maps, traffic sensor readings, utility networks, demographic maps – often maintained by different departments. Here, robust metadata and data standards make data discoverable and interoperable across the enterprise. A Spatial Data Infrastructure (SDI) approach, such as the one we built for Arunachal Pradesh, relies on data catalogs and common metadata standards so that planners can easily find and use data from any source.

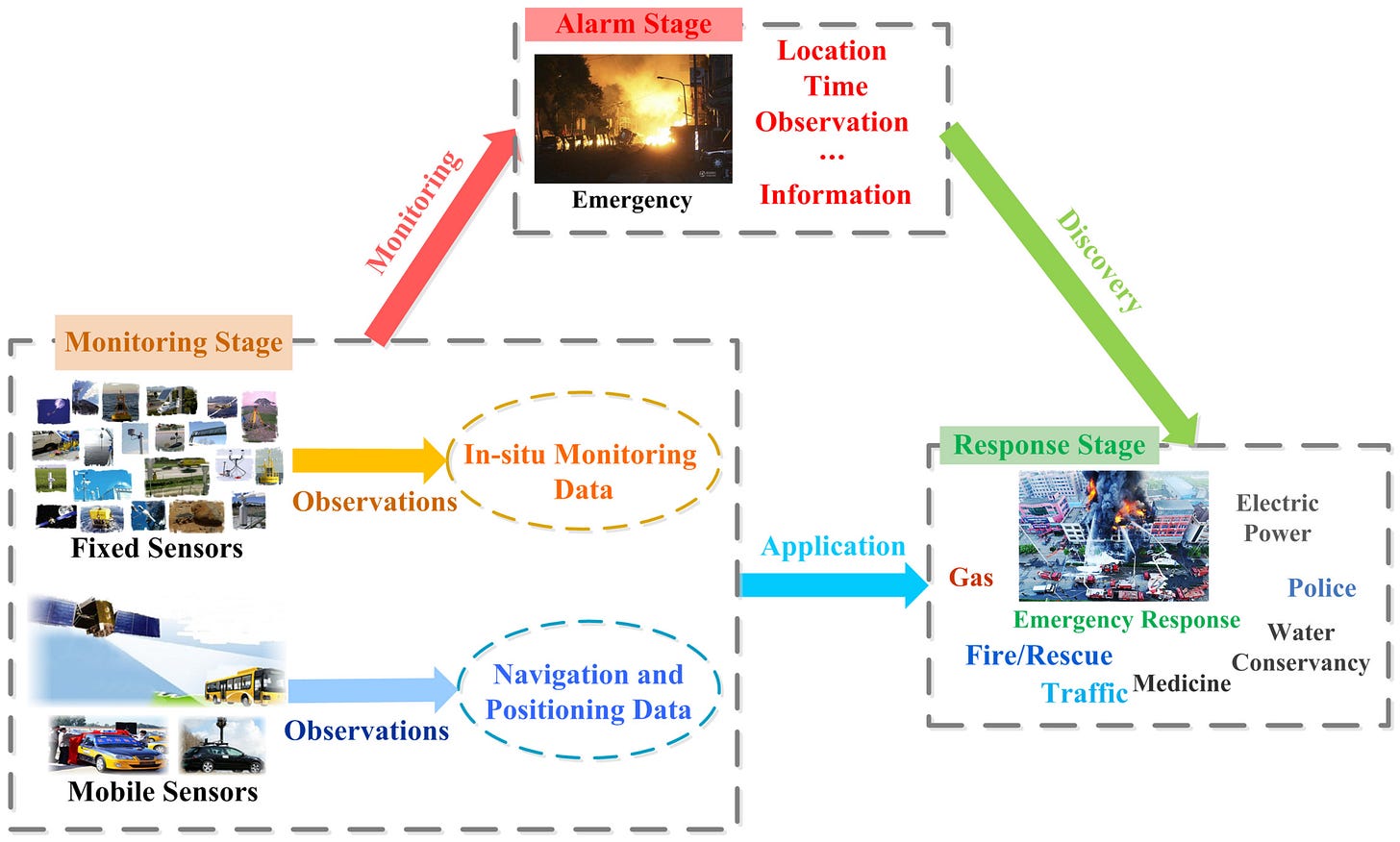

A city with a well-curated geospatial data catalog can, for example, quickly assemble all relevant datasets (imagery, flood zone maps, real-time rainfall data) during an emergency response. Conversely, without metadata, critical datasets might be overlooked or misinterpreted. The lesson is clear: good metadata governance turns raw geospatial data into a readily exploitable asset, whether on the battlefield or in urban infrastructure development.

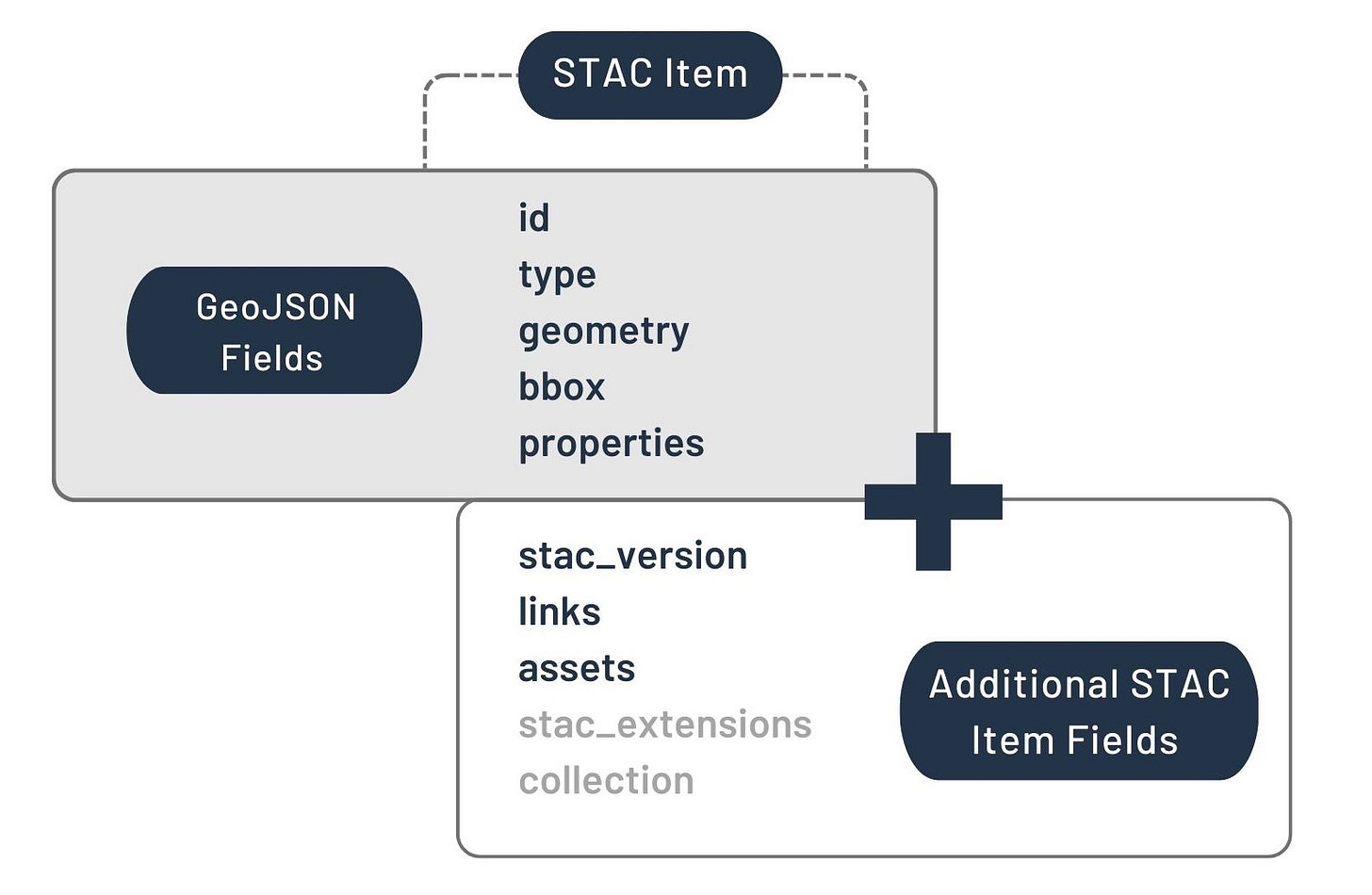

Why Open Standards Like STAC Are Critical

With so many geospatial assets in play, standards for data and metadata have become critical. One standout is the SpatioTemporal Asset Catalog (STAC), an open specification that in a few short years has emerged as a de facto standard for describing geospatial data. STAC provides a common language for geospatial metadata, allowing imagery, maps, sensor data, and more to be indexed and queried in a consistent way.2

The impact of STAC on the community has been significant: by the time STAC reached version 1.0 in 2021, it already had “significant adoption across government and commercial organizations,” born out of a broad collaboration to solve a real pain point. Previously, each satellite or dataset came with its own unique metadata schema, forcing users to learn bespoke formats or use custom software for each source.

“There was no standard method… every dataset’s metadata had its own peculiarities… no single software tool to search and access the data, ultimately placing the burden on the data user.”

STAC changed that by defining a uniform, JSON-based structure to capture an asset’s key properties (geographic footprint, timestamp, sensor details, etc.) along with links to the data.
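As a rough illustration of that structure, the sketch below assembles a minimal STAC Item as a plain Python dictionary. The top-level fields follow the STAC 1.0.0 Item layout; the helper names, the item ID, the platform name, and the asset URL are placeholders invented for this example.

```python
import json
from datetime import datetime, timezone


def bbox_to_polygon(bbox):
    """Turn (min_lon, min_lat, max_lon, max_lat) into a closed GeoJSON polygon."""
    min_lon, min_lat, max_lon, max_lat = bbox
    ring = [
        [min_lon, min_lat],
        [max_lon, min_lat],
        [max_lon, max_lat],
        [min_lon, max_lat],
        [min_lon, min_lat],  # a GeoJSON ring repeats its first point
    ]
    return {"type": "Polygon", "coordinates": [ring]}


def make_item(item_id, bbox, acquired, platform, href):
    """Build a minimal STAC Item; `acquired` must be a timezone-aware datetime."""
    stamp = acquired.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "type": "Feature",
        "stac_version": "1.0.0",
        "id": item_id,
        "geometry": bbox_to_polygon(bbox),   # geographic footprint
        "bbox": list(bbox),
        "properties": {
            "datetime": stamp,               # timestamp
            "platform": platform,            # sensor details
        },
        "links": [],
        "assets": {
            "visual": {                      # link to the data itself
                "href": href,
                "type": "image/tiff; application=geotiff; profile=cloud-optimized",
                "roles": ["data"],
            }
        },
    }


# Placeholder values, for illustration only.
item = make_item(
    item_id="example-scene-001",
    bbox=(77.0, 28.4, 77.4, 28.8),
    acquired=datetime(2025, 1, 15, 10, 30, tzinfo=timezone.utc),
    platform="example-sat",
    href="https://example.com/scenes/example-scene-001.tif",
)
item_json = json.dumps(item, indent=2)
```

Because the Item is ordinary GeoJSON plus a handful of agreed fields, any tool that reads GeoJSON can at least display the footprint, and STAC-aware tools can go further and fetch the linked assets.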

The result is a more cloud-native, interoperable geospatial ecosystem. Data providers large and small have embraced STAC – dozens of major organizations from NASA and USGS to commercial satellite firms have adopted STAC or built tools around it. Even Google Earth Engine updated its catalog to the STAC specification, demonstrating confidence in the standard.

The momentum continues into 2025: the Open Geospatial Consortium (OGC) is in the process of formally adopting STAC and the STAC API as an official Community Standard.3 This move signals to all geospatial stakeholders that STAC is production-ready and here to stay.

Why Should Organizations Care?

Adopting open standards like STAC confers tangible advantages:

For data providers, using a well-defined standard means they no longer need to invent (and maintain) proprietary catalog systems for their imagery or sensor feeds. A STAC-compliant catalog makes it easier to expose and share data – any application that understands STAC can readily index and fetch their assets.

For data consumers, the benefits are arguably even greater: instead of writing custom code for each vendor’s API or wrestling with mismatched metadata, they can use a common set of libraries and tools to search and retrieve data across many sources.

In a STAC-enabled data lake, an analyst could query “give me all images from provider X or Y over this city in the last 24 hours” in one go, rather than visiting multiple portals. This interoperability saves time and reduces integration costs. Aetosky has already built LLMs that comb through our data lakes to extract valuable insights from an organization's data.
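The "provider X or Y over this city in the last 24 hours" question maps naturally onto a STAC API Item Search request. The sketch below builds only the request body, using the standard `collections`, `bbox`, `datetime`, and `limit` search parameters. It assumes each provider's imagery is published as its own collection; the collection IDs and the bounding box are hypothetical.

```python
from datetime import datetime, timedelta, timezone

RFC3339 = "%Y-%m-%dT%H:%M:%SZ"


def build_search_body(collections, bbox, now, hours=24, limit=100):
    """Build a STAC API Item Search body for the `hours` leading up to `now`.

    `now` must be a timezone-aware datetime; it is normalized to UTC.
    """
    end = now.astimezone(timezone.utc)
    start = end - timedelta(hours=hours)
    return {
        "collections": list(collections),  # one collection per provider (assumption)
        "bbox": list(bbox),                # [min_lon, min_lat, max_lon, max_lat]
        "datetime": f"{start.strftime(RFC3339)}/{end.strftime(RFC3339)}",
        "limit": limit,
    }


# Hypothetical collection IDs and city bounding box.
body = build_search_body(
    collections=["provider-x-imagery", "provider-y-imagery"],
    bbox=(77.0, 28.4, 77.4, 28.8),
    now=datetime(2025, 3, 2, 12, 0, tzinfo=timezone.utc),
)
```

A client would typically POST this body as JSON to the catalog's `/search` endpoint and page through the returned Items. The same body works against any compliant STAC API, which is where the integration savings come from.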

Moreover, STAC’s design is flexible: through an extension mechanism, it now supports not just satellite photos but a broad variety of geospatial content, from drone imagery and video to LiDAR point clouds and derived analytic layers.

Building Structured Data Lakes for Analytics

Collecting oceans of geospatial data is one thing; organizing it for effective analytics is another challenge entirely. This is where the concept of a structured geospatial data lake comes in. A data lake is a centralized repository that can store raw data at scale – but without careful design it can turn into a dumping ground.

Our team specializes in building geospatial data lakes that avoid this fate by imposing structure, governance, and indexing on the data from day one. In practical terms, this means implementing a multi-zone architecture within the lake to balance flexibility with performance.

For example, raw data (imagery, drone video, sensor logs, etc.) is ingested and stored in its original form as a record of truth. Then a curated zone stores cleaned, standardized data (e.g. cloud-optimized GeoTIFF images, vector tiles, or normalized databases) that is partitioned and indexed, often in analysis-friendly formats. Finally, an analytics zone might contain pre-aggregated insights or AI-ready datasets (like daily change detection outputs or feature layers) for ultra-fast querying.

This tiered approach ensures that analysts and algorithms can hit the optimized data for routine queries while the raw data is still available for drill-down or re-processing as needed.
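One way to picture the tiered layout is as a naming convention plus a simple routing rule. The sketch below is an illustrative assumption, not a description of any specific deployment: the zone names mirror the three tiers described above, while the key layout, the `object_key` and `pick_zone` helpers, and the sample values are invented for the example.

```python
from datetime import date
from enum import Enum


class Zone(str, Enum):
    RAW = "raw"              # original files, kept as the record of truth
    CURATED = "curated"      # cleaned, standardized, partitioned data
    ANALYTICS = "analytics"  # pre-aggregated or AI-ready outputs


def object_key(zone: Zone, source: str, acquired: date, filename: str) -> str:
    """Build a date-partitioned storage key (hypothetical layout)."""
    return (
        f"{zone.value}/source={source}/"
        f"year={acquired.year:04d}/month={acquired.month:02d}/"
        f"day={acquired.day:02d}/{filename}"
    )


def pick_zone(available, need_original: bool = False):
    """Choose which zone should serve a request.

    Routine queries get the most optimized zone that holds the data;
    drill-down or re-processing requests go to the raw zone.
    """
    if need_original:
        return Zone.RAW if Zone.RAW in available else None
    for zone in (Zone.ANALYTICS, Zone.CURATED, Zone.RAW):
        if zone in available:
            return zone
    return None


key = object_key(Zone.CURATED, "drone-fleet", date(2025, 1, 15), "scene.tif")
routine = pick_zone({Zone.RAW, Zone.CURATED})
drill_down = pick_zone({Zone.RAW, Zone.CURATED}, need_original=True)
```

Partitioning keys by source and date is a common choice because most geospatial queries are bounded in time; other layouts, such as partitioning by spatial tile, may suit other workloads better.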

Through standard query interfaces (such as a STAC API or OGC APIs on top of the lake), users and applications can search the holdings without needing to know where or how the data is stored. A real-world illustration comes from the European Destination Earth initiative, where the data lake provides a STAC-compliant API so users can access datasets “irrespective of the source protocol or access method, ensuring that the code used for data manipulation remains independent of the data source.”

In essence, the data lake becomes a federated geospatial library – analysts ask a question (“what data do we have for this location and time frame?”) and the lake’s catalog answers with relevant items, ready for retrieval and analysis.

Conclusion: From Data Deluge to Decisive Advantage

The geospatial landscape is evolving from petabytes to exabytes, but raw volume is no longer the differentiator - context is. Defense commanders, city engineers, and corporate strategists all share the same imperative: transform torrents of pixels into insights that move the mission forward faster than events unfold. That transformation hinges on disciplined metadata, open standards such as STAC, and data-lake architectures engineered for discovery and speed.

Organizations that embrace this triad win twice. First, they collapse latency - analysts and AI systems can locate and fuse the right imagery or vector layer in seconds, not hours. Second, they future-proof their investments: every new sensor, satellite, or analytics model snaps cleanly into an interoperable ecosystem instead of spawning another proprietary silo.

Leaders who act now will convert geospatial data from an unwieldy cost center into a compounding strategic asset. Those who delay risk drowning in their own “data swamp” while faster rivals seize the terrain - both literal and competitive. If you’re ready to operationalize this vision, Aetosky stands ready to help architect, implement, and scale a metadata-first geospatial intelligence platform tailored to your mission.